August 07, 2023

We Have A Small Data Problem Not A Big Data Problem

Over the past decade, Big Data has taken center stage as a megatrend. The industry has grown enormously to provide new technologies, tools, platforms, and analytics.

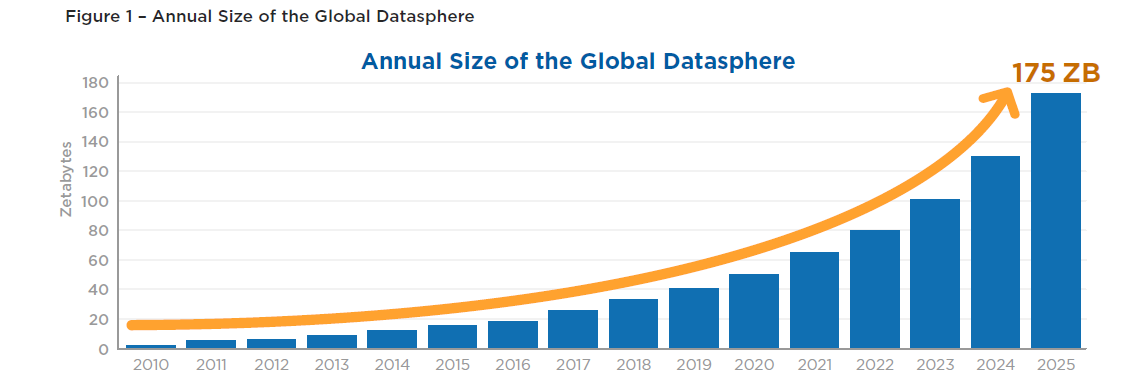

The International Data Corporation (IDC) forecasts that 175 zettabytes of data will be created by 2025. One zettabyte is one sextillion bytes, that is, 10^21 (1,000,000,000,000,000,000,000) bytes, or a trillion gigabytes.

Can you pause for a second and at least attempt to quantify or visualize this in your mind?

For comparison, the Library of Congress (the largest library in the world) had 20 petabytes of storage as of 2019.
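To put the two figures side by side, here is a rough back-of-the-envelope sketch. It assumes decimal (SI) units throughout, and the variable names are purely illustrative.

```python
# Back-of-the-envelope comparison, assuming decimal (SI) units.
ZETTABYTE = 10**21  # bytes
PETABYTE = 10**15   # bytes
GIGABYTE = 10**9    # bytes

forecast_bytes = 175 * ZETTABYTE  # IDC forecast for 2025 (from the text)
library_bytes = 20 * PETABYTE     # Library of Congress storage, 2019 (from the text)

# How many gigabytes fit in one zettabyte?
gigabytes_per_zettabyte = ZETTABYTE // GIGABYTE

# How many times would the library's storage fit into the forecast?
libraries_in_forecast = forecast_bytes // library_bytes

print(f"1 ZB = {gigabytes_per_zettabyte:,} GB")
print(f"175 ZB is {libraries_in_forecast:,} times 20 PB")
```

Under these assumptions, the forecast works out to 8.75 million times the library's 2019 storage.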

It is safe to say that data is growing at an unprecedented pace and will continue to do so. However, we must also pause to ask ourselves a few questions:

- What are we going to do with all this data?

- Is the bulk of this data just going to sit in secondary or tertiary storage in data centers while adding to the carbon footprint?

- Does all of this "Big Data" follow the 80/20 Pareto principle, meaning only 20% of the data is valuable (the signal)? Is the other 80% just noise?

- GPT was trained on an enormous corpus, but wasn't it fed only quality data, which required removing a lot of noise?

- As a simpler example, we often take several shots of the same photo on our phones. If you shoot 10 photos (say 5 MB each) but only ever need the one perfect shot, didn't you just create 50 MB of data of which only 5 MB was actually of value to you?
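The photo example can be written out as a tiny calculation. This is only a sketch: the function name `signal_share` and its parameters are hypothetical, and it assumes every photo has the same size.

```python
def signal_share(total_items: int, useful_items: int, size_mb: int) -> tuple[int, int, float]:
    """Return (created_mb, useful_mb, useful_fraction) for equally sized items."""
    created_mb = total_items * size_mb
    useful_mb = useful_items * size_mb
    return created_mb, useful_mb, useful_mb / created_mb


# 10 photos of 5 MB each, of which only one is kept.
created_mb, useful_mb, fraction = signal_share(total_items=10, useful_items=1, size_mb=5)
print(f"created {created_mb} MB, useful {useful_mb} MB ({fraction:.0%} signal)")
```

In this example the useful share is 10%, which is even lower than the 20% the Pareto rule of thumb would suggest.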

In this post, we want to present an alternative view of the "Big Data" trend. We'd like to make the case that we are facing a small data problem, not a big data problem.

Don't take our word for it. See what Jordan Tigani, one of the founding engineers of Google's BigQuery, had to say in his excellent article "Big Data Is Dead".

Jordan makes some compelling arguments worth quoting:

"Most of the people using BigQuery don’t really have Big Data. Even the ones who do tend to use workloads that only use a small fraction of their dataset sizes."

"The era of Big Data is over. It had a good run, but now we can stop worrying about data size and focus on how we’re going to use it to make better decisions."

"I can say that the vast majority of customers had less than a terabyte of data in total data storage."

"The general feedback we got talking to folks in the industry was that 100 GB was the right order of magnitude for a data warehouse."

"All large data sets are generated over time. Time is almost always an axis in a data set. Most analysis is done over the recent data. Scanning old data is pretty wasteful; it doesn’t change, so why would you spend money reading it over and over again?"

"Dashboards, for example, very often are built from aggregated data. People look at the last hour, or the last day, or the last week’s worth of data. Smaller tables tend to be queried more frequently, giant tables more selectively."

"A couple of years ago I did an analysis of BigQuery queries, looking at customers spending more than $1000 / year. 90% of queries processed less than 100 MB of data."

"Tricks like computing over compressed data, projection, and predicate pushdown are ways that you can do less IO at query time. And less IO turns into less computation that needs to be done, which turns into lower costs and latency."

"There are acute economic pressures incentivizing people to reduce the amount of data they process."

"An alternate definition of Big Data is when the cost of keeping data around is less than the cost of figuring out what to throw away."

"Data can be an aid to lawsuits against you. Keeping old data around can prolong your legal exposure (e.g. GDPR/HIPAA compliance)."

"If you are keeping around old data, it is good to understand why you are keeping it. Are you really just a data hoarder?"
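The projection and predicate pushdown mentioned in the quotes above can be illustrated with a toy model in plain Python. This is a sketch under stated assumptions, not how BigQuery is implemented: the `RowGroup` class, the `scan` function, and the sample table are all hypothetical, and the number of values read stands in for IO.

```python
from dataclasses import dataclass, field


@dataclass
class RowGroup:
    """A chunk of a columnar table: column name -> list of values."""
    columns: dict
    stats: dict = field(init=False)

    def __post_init__(self):
        # Columnar formats typically keep min/max per column in metadata,
        # so a reader can consult them without touching the data itself.
        self.stats = {name: (min(v), max(v)) for name, v in self.columns.items()}


def scan(row_groups, select, filter_column, lower_bound):
    """Return (rows, values_read) for rows where filter_column >= lower_bound."""
    rows, values_read = [], 0
    needed = set(select) | {filter_column}
    for group in row_groups:
        # Predicate pushdown: skip the whole group if its max rules it out.
        if group.stats[filter_column][1] < lower_bound:
            continue
        # Projection: read only the columns the query needs.
        values_read += sum(len(group.columns[name]) for name in needed)
        for i, value in enumerate(group.columns[filter_column]):
            if value >= lower_bound:
                rows.append({name: group.columns[name][i] for name in select})
    return rows, values_read


# Hypothetical table: 12 rows in 3 row groups, ordered by day.
table = [
    RowGroup({"day": [1, 2, 3, 4], "user": list("abcd"), "payload": ["x"] * 4}),
    RowGroup({"day": [5, 6, 7, 8], "user": list("efgh"), "payload": ["x"] * 4}),
    RowGroup({"day": [9, 10, 11, 12], "user": list("ijkl"), "payload": ["x"] * 4}),
]

total_values = sum(len(v) for g in table for v in g.columns.values())
rows, values_read = scan(table, select=["user"], filter_column="day", lower_bound=9)
print(f"read {values_read} of {total_values} values, matched {len(rows)} rows")
```

Because the query only asks for recent days and a single column, the scan touches one row group and two columns, which mirrors the point that most analysis runs over a small, recent slice of the data.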

Big Data was never the limiting factor for gaining actionable insights on real-world problems.

Actionable insights come from the micro, not the macro. Less is more.

You have to look for the needle in a haystack, and the haystack is often just small data, not big data.